- Category: News

Recent updates on the ALOHA use case aimed at improving the surveillance of critical infrastructures (correctional facilities, central banks, transportation systems, public health facilities, energy and water supply chains). Within the ALOHA project, PKE is working on deep-learning-based technologies to develop and improve intelligent video-based intrusion detection systems that secure such infrastructures. In this scenario, an open issue is the long delay between the installation of a system at a specific site and the point at which it becomes reliable. An evaluation period of a few weeks up to several months is currently typical. During this period, algorithms are parameterized and tuned until they perform optimally. Video surveillance of critical infrastructures involves large areas and building complexes, and monitoring them properly may require hundreds or even thousands of cameras.

- Category: News

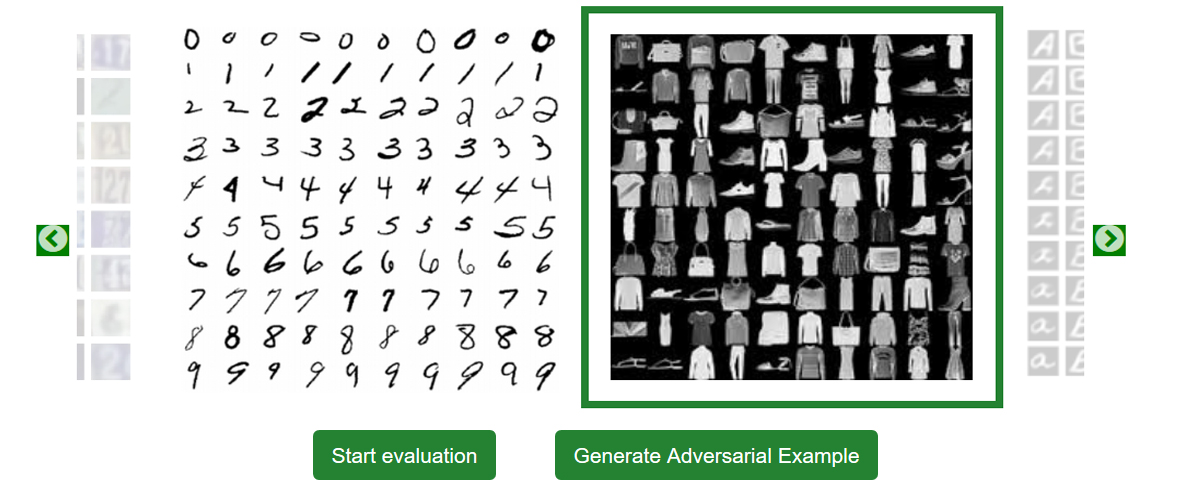

This web demo, developed with the support of the European Union's Horizon 2020 Research and Innovation programme through the ALOHA project, allows the user to evaluate the security level of a neural network against worst-case input perturbations. Attackers add such specifically designed perturbations to inputs to create adversarial examples, which they feed to the network in evasion attacks that cause it to misclassify. To defend a system, we first need to evaluate how effective these attacks are. During the security evaluation process, the network is tested against increasing levels of perturbation, and its accuracy is tracked to build a security evaluation curve. This curve shows the drop in accuracy as a function of the maximum perturbation allowed on the input, and the model designer can use it directly to compare different networks and countermeasures.
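The idea of a security evaluation curve can be illustrated with a minimal sketch. This is not the demo's implementation: it assumes a toy linear binary classifier with hypothetical weights `w` and bias `b`, synthetic data, and an L-infinity bound `eps` on the perturbation. For a linear model, the worst-case perturbation under that bound has a closed form, so no attack library is needed. All function names here are illustrative.

```python
import random

def sign(v):
    """Return -1, 0, or +1 depending on the sign of v."""
    return (v > 0) - (v < 0)

def predict(w, b, x):
    """Toy linear binary classifier (assumption): returns +1 or -1."""
    score = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1 if score >= 0 else -1

def worst_case_perturb(w, x, y, eps):
    """Worst-case L-infinity perturbation of size eps for a linear model:
    move every feature by eps in the direction that reduces the margin
    of the true label y."""
    return [xi - eps * y * sign(wi) for wi, xi in zip(w, x)]

def security_evaluation_curve(w, b, data, eps_values):
    """Accuracy under worst-case perturbation for each eps in eps_values."""
    curve = []
    for eps in eps_values:
        correct = sum(
            predict(w, b, worst_case_perturb(w, x, y, eps)) == y
            for x, y in data
        )
        curve.append((eps, correct / len(data)))
    return curve

# Synthetic data (assumption): labels come from the model itself,
# so the accuracy on unperturbed inputs is 1.0 by construction.
rng = random.Random(0)
w, b = [1.0, -2.0], 0.0  # hypothetical model parameters
data = []
for _ in range(200):
    x = [rng.uniform(-1, 1), rng.uniform(-1, 1)]
    data.append((x, predict(w, b, x)))

eps_values = [0.0, 0.1, 0.2, 0.4, 0.8]
curve = security_evaluation_curve(w, b, data, eps_values)
for eps, acc in curve:
    print(f"eps={eps:.1f}  accuracy={acc:.2f}")
```

Plotting accuracy against `eps` gives the curve; a model or countermeasure whose curve decays more slowly is more robust under this threat model. For a deep network the worst-case perturbation has no closed form and would have to be approximated with an iterative attack.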