Recent updates on the ALOHA use case aimed at improving the surveillance of critical infrastructures (correctional facilities, central banks, transportation systems, public health facilities, and energy and water supply chains).

Within the ALOHA project, PKE is working on deep learning-based technologies to develop and improve intelligent video-based intrusion detection systems that secure such infrastructures. In this scenario, an open issue is the long delay between the installation of a system at a specific site and the point at which it becomes reliable. An evaluation period of a few weeks up to several months is currently typical. During this period, algorithms are parameterized and tuned toward their optimal state. Video surveillance for critical infrastructures involves large areas and building complexes, and monitoring them properly may require hundreds or even thousands of cameras.

This scale increases the need for accuracy, since a large number of false alarms or missed real alarms renders the system ineffective. As the number of system components grows, energy efficiency also becomes relevant for limiting overall operational costs. Power consumption becomes critical when such a system is to be used in a mobile configuration, for instance at large events.
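To make the scaling argument concrete, the following minimal sketch estimates how false alarms and power draw grow with the number of cameras. All figures and function names are hypothetical and chosen for illustration only; they are not measurements from the ALOHA project. The sketch also assumes that cameras raise false alarms independently of each other, which is a simplification.

```python
def expected_false_alarms(num_cameras: int, false_alarms_per_camera: float) -> float:
    """Expected number of false alarms per day across the whole site."""
    return num_cameras * false_alarms_per_camera


def prob_any_false_alarm(num_cameras: int, p_per_camera: float) -> float:
    """Probability that at least one camera raises a false alarm in a given
    time window, assuming independent cameras (illustrative assumption)."""
    return 1.0 - (1.0 - p_per_camera) ** num_cameras


def total_power_watts(num_cameras: int, watts_per_camera: float) -> float:
    """Aggregate power draw of all cameras, ignoring network and servers."""
    return num_cameras * watts_per_camera


if __name__ == "__main__":
    # Hypothetical site: 1000 cameras, 0.5 false alarms per camera per day.
    print(expected_false_alarms(1000, 0.5))
    # Even a 1% chance per camera per window makes a site-wide alarm very likely.
    print(prob_any_false_alarm(1000, 0.01))
    # Hypothetical 10 W per smart camera.
    print(total_power_watts(1000, 10.0))
```

Under these assumptions, a per-camera error rate that looks negligible in isolation still produces a steady stream of alarms at site level, which is why both accuracy and per-device power matter at this scale.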

Security also plays a very important role in a surveillance solution. For instance, resilience to input manipulations is a critical aspect to consider when planning such systems.

Security surveillance camera (left) and control room with surveillance system (right)

Using deep learning-based solutions for safety and security applications is an upcoming trend in the market. Such solutions are expected to advance the state of the art significantly, as noted by world market leader HikVision. The market for embedded devices with deep learning accelerators is currently very dynamic, with many smart camera producers announcing new products or including such technologies in their development roadmaps. The SAST platform, developed by a Bosch-backed startup, is an example of an effort in this direction.

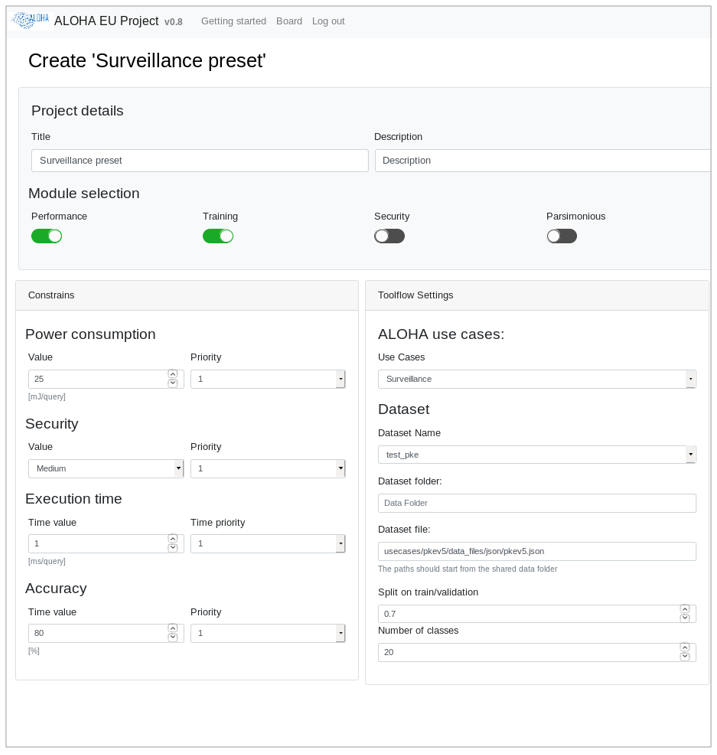

We expect the ALOHA Toolflow to improve our surveillance systems by providing efficient deep learning models tailored to our specific hardware needs. Another important expected benefit is a simpler design process, as the ALOHA Toolflow is designed to generate efficient deep learning models automatically, without requiring highly specialized knowledge.

In the surveillance use case, we are evaluating the use of the Toolflow to automatically design and train deep neural network models for object detection targeting smart cameras with dedicated deep learning accelerators.

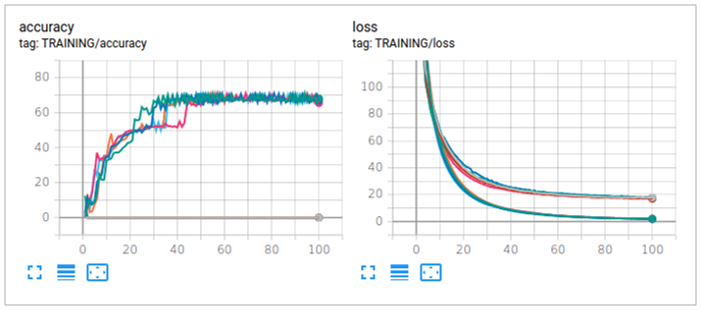

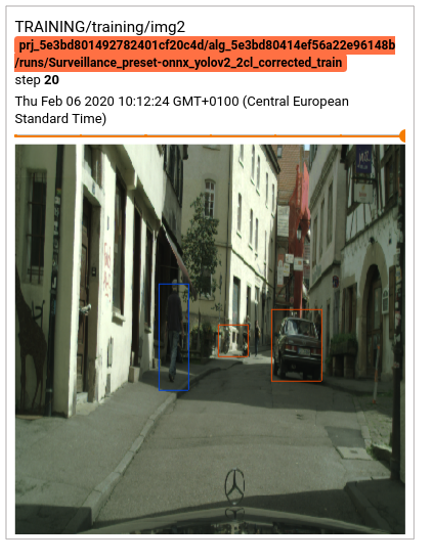

We use state-of-the-art object detection DNNs such as YOLO as a starting point. Based on that, the Toolflow generates design points that are similar to the initial model but better suited to the target hardware platform, according to input constraints such as power consumption, accuracy and security. The Toolflow trains and analyzes these models against those criteria, and the resulting trained model can be ported to the target platform.
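The idea of choosing among design points under constraints can be illustrated with a minimal sketch. It is not the ALOHA Toolflow API or its actual search algorithm: the `DesignPoint` class, the `select` function, the scores and the thresholds are all hypothetical. The sketch filters candidates by the constraints and then keeps only those that no other feasible candidate dominates (a simple Pareto filter).

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class DesignPoint:
    """Hypothetical summary of one candidate model after training and analysis."""
    name: str
    accuracy: float    # higher is better, e.g. a detection score in [0, 1]
    power_w: float     # lower is better, estimated power on the target
    robustness: float  # higher is better, placeholder for a security score


def dominates(a: DesignPoint, b: DesignPoint) -> bool:
    """True if a is at least as good as b everywhere and strictly better somewhere."""
    no_worse = (a.accuracy >= b.accuracy and a.power_w <= b.power_w
                and a.robustness >= b.robustness)
    better = (a.accuracy > b.accuracy or a.power_w < b.power_w
              or a.robustness > b.robustness)
    return no_worse and better


def select(points: List[DesignPoint], min_accuracy: float,
           max_power_w: float, min_robustness: float) -> List[DesignPoint]:
    """Keep feasible points, then drop any point dominated by another feasible one."""
    feasible = [p for p in points
                if p.accuracy >= min_accuracy
                and p.power_w <= max_power_w
                and p.robustness >= min_robustness]
    return [p for p in feasible
            if not any(dominates(q, p) for q in feasible)]


# Illustrative values only, not project results.
CANDIDATES = [
    DesignPoint("baseline", accuracy=0.80, power_w=12.0, robustness=0.60),
    DesignPoint("variant_a", accuracy=0.78, power_w=5.0, robustness=0.65),
    DesignPoint("variant_b", accuracy=0.70, power_w=3.0, robustness=0.50),
    DesignPoint("variant_c", accuracy=0.76, power_w=6.0, robustness=0.60),
]

if __name__ == "__main__":
    for point in select(CANDIDATES, min_accuracy=0.75,
                        max_power_w=8.0, min_robustness=0.55):
        print(point)
```

With these made-up numbers, the baseline is rejected for exceeding the power budget, `variant_b` for insufficient accuracy, and `variant_c` because `variant_a` is better on every criterion.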

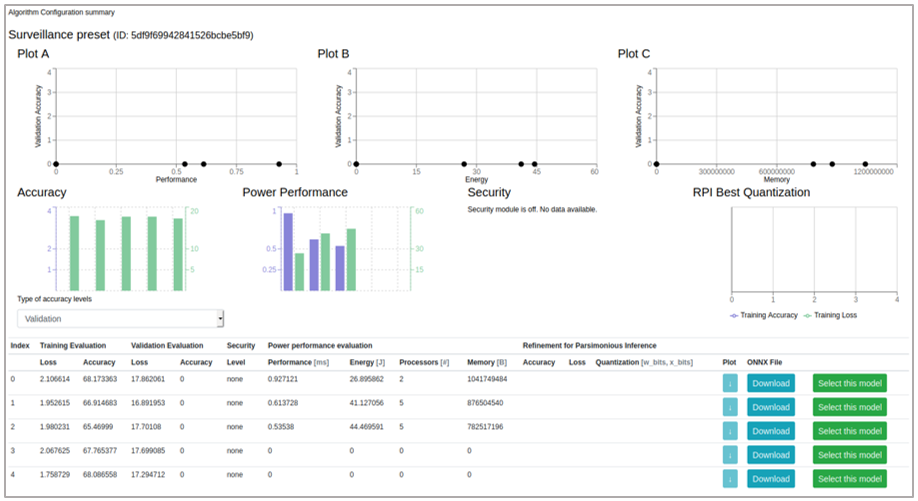

Toolflow surveillance project preset (left) and Surveillance use case Toolflow training outputs (right)

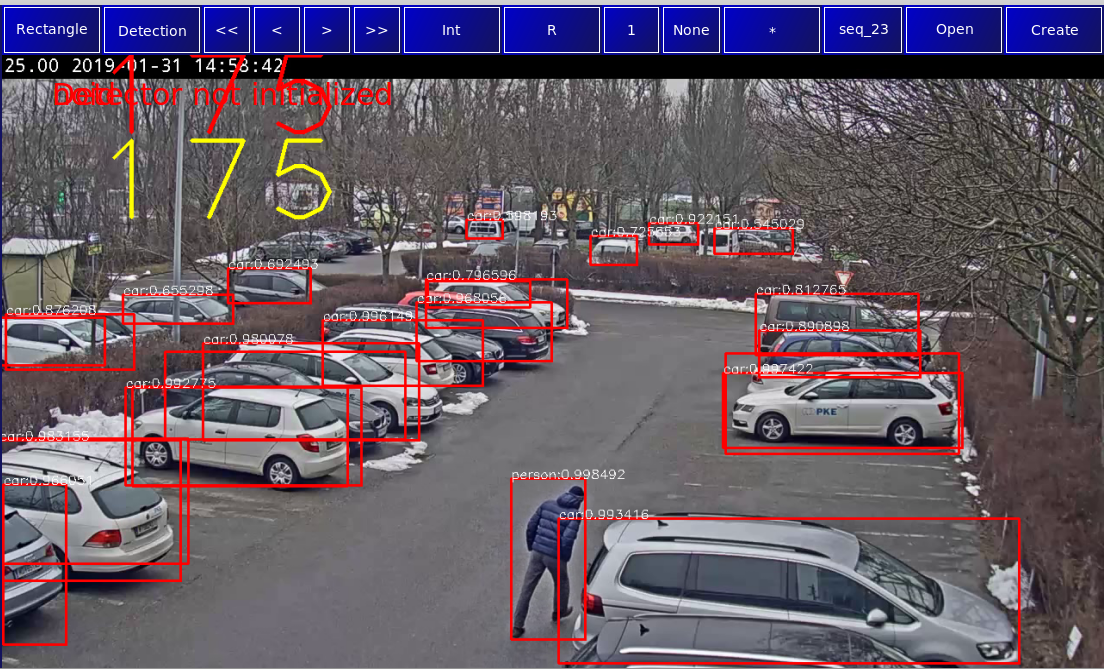

Visualization of detection results during the training process (left) and surveillance preset results (right)

As target platforms, we are evaluating the Xilinx Zynq-7000 SoC ZC706 and, most recently, a smart camera with a Qualcomm Hexagon 685 DSP that features the Android-based SAST ecosystem for developing security camera applications.

SAST camera (left) and detection results from PKE dataset (right)

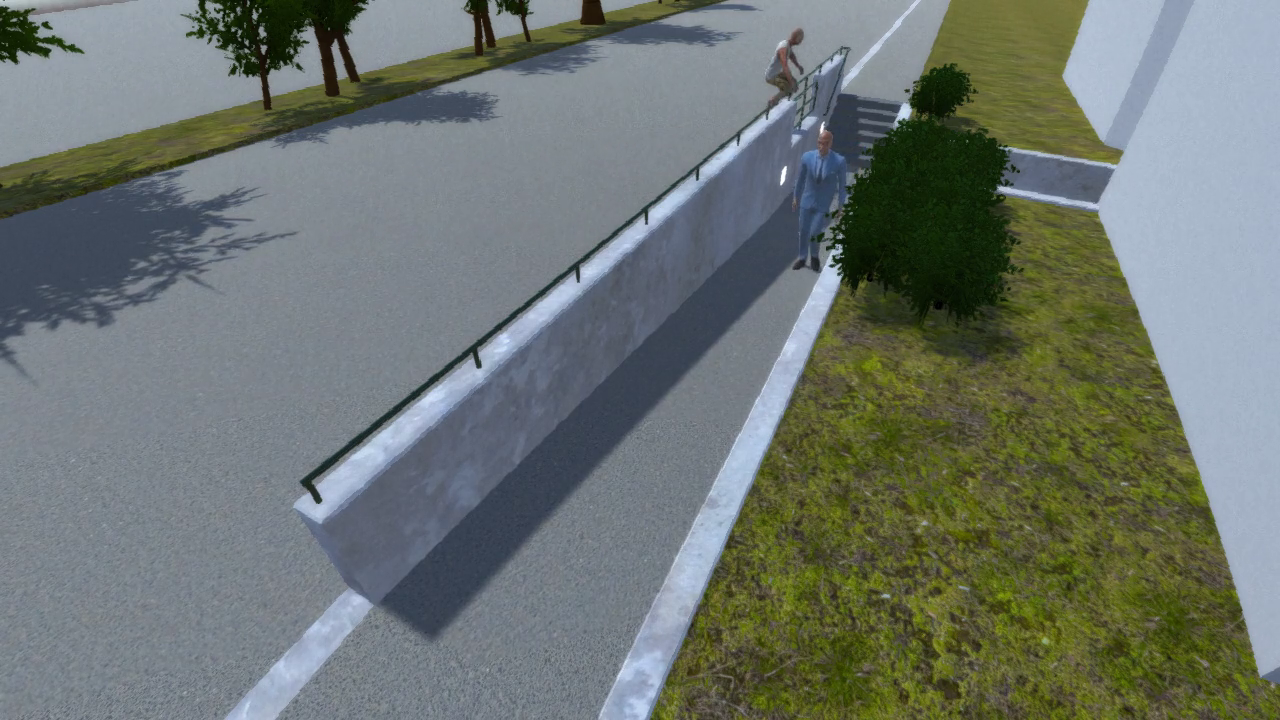

In the surveillance use case, we are also investigating deep learning-based behavior analysis to improve the quality of detections and thereby reduce the number of false alarms. An important part of this work is the creation of our own synthetic dataset for behavior analysis. With this approach, we want to mitigate the difficulty of acquiring a meaningful number of positive data samples for training machine learning-based algorithms.

Artificial image dataset for behavior analysis